As a CTO, one of the biggest decisions you make is when to launch your product. It’s not just about building features; it’s about making sure everything works smoothly in real-world use. That’s why a QA readiness assessment matters: it tells you whether your software has been properly tested for functional bugs, performance, security, and behavior under load.

Skipping this assessment can lead to serious problems after launch. In fact, 40% of companies face product failures because of incomplete testing or missing QA plans.

This blog gives you a simple testing checklist with 10 key questions every CTO should ask before going live. You’ll also learn how working with expert software testing services can give your team the extra support it needs.

What Is a QA Readiness Assessment?

A QA readiness assessment is like a final check-up for your software before it goes live. It helps you figure out if your product is really ready for users—or if more testing is needed.

This assessment looks at things like:

- Are all the important features tested?

- Is your testing team prepared?

- Have you checked for bugs, speed issues, or security risks?

- Is your testing environment similar to your live environment?

Think of it as a safety checklist. Just like a pilot goes through pre-flight checks, your team needs to go through this QA checklist to avoid surprises later. It gives you a clear picture of how strong your testing process is and what might still be missing.

For CTOs, this step is a smart way to reduce risk and launch with confidence.

Why a Testing Evaluation Matters Before Launch

Before releasing your software, a testing evaluation helps you assess if your QA efforts have truly covered the system’s critical paths and edge cases. It’s not just about passing test cases; it’s about validating the quality, reliability, and stability of your product under different conditions.

Here’s why it matters technically:

- Functional Coverage: Have all the user stories and modules been tested against business logic? An evaluation checks whether each function behaves as expected, not just in isolation but also when integrated.

- Regression Testing Confidence: Have recent changes broken older features? Skipping regression tests is a major risk, especially in agile deployments where frequent updates are pushed.

- Performance Metrics: Is the system optimized for load time, server response, and throughput? Without stress testing and load simulation, your app may crash during peak traffic.

- Security Validation: Have known vulnerabilities (e.g., SQL injection, XSS) been tested? A quick pen test isn’t enough; you need to evaluate whether your app follows OWASP guidance such as the OWASP Top 10.

- Environment Parity: Does your staging mirror production? Many bugs appear only because of mismatched configurations, missing variables, or third-party service limits in lower environments.

Conducting a testing evaluation before launch reduces production bugs, prevents escalations, and gives your stakeholders data-backed confidence. It aligns your QA strategy with release goals, so no critical checks are missed in the rush to ship.

The Essential Testing Readiness Checklist: 10 Questions Every CTO Should Ask

Before approving a release, a CTO needs full visibility into the QA pipeline, not just progress reports. This testing readiness checklist will help you evaluate whether your team, tools, and test coverage are aligned with your go-live goals.

Each question below is tied to a critical QA pillar: test strategy, coverage, automation, performance, security, and environment parity.

1. Do we have a documented QA strategy aligned with business goals?

It’s not enough to run tests; you need a strategy that outlines what will be tested, when, and by whom. Your QA strategy should define entry/exit criteria, test levels (unit, integration, system, UAT), and priority flows. It should reflect real user journeys and business risk.

2. Are we achieving meaningful test coverage across critical modules?

Ask your QA lead for metrics like statement coverage, branch coverage, and requirement traceability matrices (RTMs). Good coverage isn’t 100%—it’s targeted. Focus on high-impact areas such as payment gateways, onboarding flows, and authentication modules.
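
One way to make "targeted" coverage concrete is a per-module threshold check. The sketch below is a minimal illustration in Python, not a prescribed method: the module names, the coverage numbers, and the `CRITICAL_MIN` / `DEFAULT_MIN` thresholds are all hypothetical, and in practice the figures would come from your coverage tool's report.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModuleCoverage:
    name: str
    branch_coverage: float  # fraction of branches exercised, 0.0 to 1.0
    critical: bool          # True for high-impact areas such as payments or auth


# Hypothetical thresholds: stricter for critical modules, looser elsewhere.
CRITICAL_MIN = 0.90
DEFAULT_MIN = 0.60


def coverage_gaps(modules):
    """Return the names of modules whose branch coverage is below their threshold."""
    gaps = []
    for m in modules:
        required = CRITICAL_MIN if m.critical else DEFAULT_MIN
        if m.branch_coverage < required:
            gaps.append(m.name)
    return gaps


# Illustrative numbers only, not measurements from a real project.
report = [
    ModuleCoverage("payments", 0.93, critical=True),
    ModuleCoverage("authentication", 0.81, critical=True),
    ModuleCoverage("onboarding", 0.88, critical=True),
    ModuleCoverage("admin_reports", 0.65, critical=False),
]

gaps = coverage_gaps(report)
print(gaps)
```

Here the low-risk `admin_reports` module passes at 65%, while two critical modules are flagged even though their coverage is higher. That is the point of targeting: the bar depends on business risk, not on a single global percentage.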

3. Have we implemented regression automation for stable, repeatable builds?

Automation isn’t just about speed—it’s about consistency. Confirm that your regression suite is integrated into your CI/CD pipeline (e.g., Jenkins, GitHub Actions) and can auto-trigger on code merges. Use tools like Selenium, Cypress, or Playwright for browser flows, and REST-assured or Postman for APIs.

4. Is the staging environment fully synced with production configurations?

This includes database schemas, third-party APIs, environment variables, and deployment architecture. Discrepancies here often cause environment-specific bugs. Use Infrastructure as Code (IaC) tools like Terraform or Ansible to maintain parity.
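
A lightweight parity check can complement IaC. The following Python sketch compares two flat configuration mappings and reports the keys that differ. It assumes you can export each environment's settings as key/value pairs; the keys, values, and the `ignore` list shown are hypothetical.

```python
def config_drift(staging, production, ignore=()):
    """Return {key: (staging_value, production_value)} for every key that differs.

    Keys listed in `ignore` (values that are expected to differ, such as
    hostnames) are skipped. A key absent from one side is reported as "<missing>".
    """
    drift = {}
    for key in sorted(set(staging) | set(production)):
        if key in ignore:
            continue
        s_value = staging.get(key, "<missing>")
        p_value = production.get(key, "<missing>")
        if s_value != p_value:
            drift[key] = (s_value, p_value)
    return drift


# Hypothetical exported settings for each environment.
staging = {
    "DB_SCHEMA_VERSION": "41",
    "PAYMENT_API_TIMEOUT_MS": "5000",
    "BASE_URL": "https://staging.example.com",
}
production = {
    "DB_SCHEMA_VERSION": "42",
    "PAYMENT_API_TIMEOUT_MS": "5000",
    "BASE_URL": "https://www.example.com",
    "CACHE_TTL_S": "300",
}

drift = config_drift(staging, production, ignore=("BASE_URL",))
for key, (s_value, p_value) in drift.items():
    print(f"{key}: staging={s_value} production={p_value}")
```

In this example the check surfaces a schema version mismatch and a variable that exists only in production, the kind of discrepancy that tends to produce environment-specific bugs.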

5. Have we performed end-to-end (E2E) testing across key user journeys?

Don’t just test isolated functions—verify real-world usage. E2E testing should simulate full flows like “user signup → email verification → payment → dashboard access.” Tools like TestCafe or Puppeteer can help automate these flows.

6. Have performance and load tests been executed with real usage patterns?

Validate system behavior under stress. Use JMeter, Gatling, or Locust to simulate user loads. Focus on KPIs like response time (under 2s), throughput (requests/second), and error rate (<1%). Identify memory leaks, DB bottlenecks, or thread pool exhaustion early.
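
To show how these KPIs might be checked against targets, here is a small Python sketch that summarizes raw samples from a load test run. It is an assumption-laden illustration: the sample format is hypothetical (your tool's export will differ), and using the 95th percentile as the "response time" figure is one common choice rather than something the targets above mandate.

```python
def summarize_load_test(samples, duration_s):
    """Summarize one load test run.

    samples: list of (latency_seconds, succeeded) tuples, one per request.
    duration_s: wall-clock length of the run in seconds.
    """
    if not samples or duration_s <= 0:
        raise ValueError("need at least one sample and a positive duration")

    latencies = sorted(latency for latency, _ in samples)
    n = len(latencies)
    # Nearest-rank 95th percentile, computed with integer math: ceil(0.95 * n).
    rank = (95 * n + 99) // 100
    errors = sum(1 for _, succeeded in samples if not succeeded)

    return {
        "p95_s": latencies[rank - 1],
        "throughput_rps": n / duration_s,
        "error_rate": errors / n,
    }


def meets_targets(summary, max_p95_s=2.0, max_error_rate=0.01):
    """Check the summary against the response-time and error-rate targets."""
    return summary["p95_s"] < max_p95_s and summary["error_rate"] < max_error_rate


# Illustrative run: 200 requests over 20 seconds, one of which failed slowly.
samples = [(0.4, True)] * 195 + [(1.5, True)] * 4 + [(3.0, False)]
summary = summarize_load_test(samples, duration_s=20)
print(summary, meets_targets(summary))
```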

7. Is security testing complete for both static and dynamic layers?

You need both SAST (Static Application Security Testing) and DAST (Dynamic Application Security Testing). SAST checks source code for vulnerabilities (e.g., using SonarQube), while DAST simulates attacks like SQL injection or XSS using tools like OWASP ZAP or Burp Suite.

8. Are defect triage and resolution workflows effective and traceable?

Track defect life cycles across tools like Jira, Azure DevOps, or Bugzilla. You should have clear SLAs for critical vs minor bugs and regular triage meetings. Metrics like Mean Time to Detect (MTTD) and Mean Time to Repair (MTTR) offer insight into QA efficiency.
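
As a rough illustration of how these two metrics can be derived, the Python sketch below averages durations over a list of defects. The record layout and timestamps are hypothetical, and it assumes MTTD is measured from when a defect was introduced to when it was detected, and MTTR from detection to resolution; teams define these intervals differently, so adjust to your own convention.

```python
from datetime import datetime, timedelta


def mean_duration(intervals):
    """Average length of a collection of (start, end) datetime pairs."""
    intervals = list(intervals)
    if not intervals:
        raise ValueError("no intervals to average")
    total = sum((end - start for start, end in intervals), timedelta())
    return total / len(intervals)


# Hypothetical defect records, e.g. exported from an issue tracker.
defects = [
    {
        "introduced": datetime(2024, 1, 1, 9, 0),
        "detected": datetime(2024, 1, 1, 13, 0),
        "resolved": datetime(2024, 1, 2, 13, 0),
    },
    {
        "introduced": datetime(2024, 1, 3, 9, 0),
        "detected": datetime(2024, 1, 3, 11, 0),
        "resolved": datetime(2024, 1, 3, 19, 0),
    },
]

mttd = mean_duration((d["introduced"], d["detected"]) for d in defects)
mttr = mean_duration((d["detected"], d["resolved"]) for d in defects)
print(f"MTTD: {mttd}, MTTR: {mttr}")
```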

9. Are test data and mocking frameworks in place for edge cases?

Use realistic test data for valid results. For external dependencies (e.g., payment gateways or shipping APIs), use mocking tools like WireMock or MockServer to simulate responses. This allows stable testing without hitting real services.
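
The same idea can be shown in miniature with Python's standard `unittest.mock`, used here as an in-process stand-in for HTTP-level tools like WireMock or MockServer. The `checkout` function, the `charge` method, and the response fields are all hypothetical; the sketch only illustrates how a stubbed dependency lets you exercise both the happy path and a declined-payment edge case without calling a real gateway.

```python
from unittest.mock import Mock


class PaymentDeclined(Exception):
    """Raised when the (hypothetical) gateway does not approve a charge."""


def checkout(gateway, order_id, amount_cents):
    """Charge an order through the gateway and return the transaction id."""
    response = gateway.charge(order_id=order_id, amount_cents=amount_cents)
    if response["status"] != "approved":
        raise PaymentDeclined(response.get("reason", "unknown"))
    return response["transaction_id"]


# Happy path: the stubbed gateway approves the charge.
gateway = Mock()
gateway.charge.return_value = {"status": "approved", "transaction_id": "txn-123"}
txn = checkout(gateway, "order-1", 4999)

# Edge case: the stubbed gateway declines, which is hard to trigger on demand
# against a real service.
declined_gateway = Mock()
declined_gateway.charge.return_value = {
    "status": "declined",
    "reason": "insufficient_funds",
}
try:
    checkout(declined_gateway, "order-2", 4999)
    declined_reason = None
except PaymentDeclined as exc:
    declined_reason = str(exc)

print(txn, declined_reason)
```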

10. Do we have a rollback or hotfix strategy in case of production failures?

Even with perfect QA, issues can happen. Have a rollback plan or feature flag system in place using tools like LaunchDarkly. QA should be involved in validating rollback flows or emergency patches before they’re deployed.
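
To make the feature flag idea concrete, here is a minimal in-process sketch in Python. It is not the LaunchDarkly API or any vendor's SDK: the `FeatureFlags` class, the flag name, and the pricing functions are hypothetical, and a real system would read flags from a managed service so they can be flipped without a redeploy.

```python
class FeatureFlags:
    """A tiny in-memory flag store; unknown flags default to off."""

    def __init__(self, flags=None):
        self._flags = dict(flags or {})

    def is_enabled(self, name):
        return self._flags.get(name, False)

    def disable(self, name):
        self._flags[name] = False


def legacy_pricing(total_cents):
    """The existing, known-good code path."""
    return total_cents


def new_pricing(total_cents):
    """The newly released code path (hypothetical 10% discount)."""
    return total_cents * 90 // 100


def price(flags, total_cents):
    """Route to the new path only while its flag is on."""
    if flags.is_enabled("new_pricing_engine"):
        return new_pricing(total_cents)
    return legacy_pricing(total_cents)


flags = FeatureFlags({"new_pricing_engine": True})
before = price(flags, 1000)

# Simulated incident response: turn the flag off instead of redeploying.
flags.disable("new_pricing_engine")
after = price(flags, 1000)

print(before, after)
```

Because both code paths stay deployed, QA can validate the "flag off" path before launch, which is effectively a rehearsal of the rollback.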

How a Quality Checklist Saves You from Product Failure

A well-defined quality checklist acts as a safety net that protects your product from avoidable failures after launch. It brings structure to your QA process by ensuring all critical aspects such as functional accuracy, regression stability, performance thresholds, and security vulnerabilities are tested thoroughly before deployment. Without it, teams often miss edge cases, overlook integration issues, or skip validating real-world scenarios under pressure.

A checklist also keeps your testing aligned with business goals, helps track progress across sprints, and enforces accountability within cross-functional teams. By using a QA readiness checklist, you reduce the risk of post-release incidents like app crashes, data leaks, or feature failures, giving your product a higher chance of success in production.

When to Bring in Expert Software Testing Services

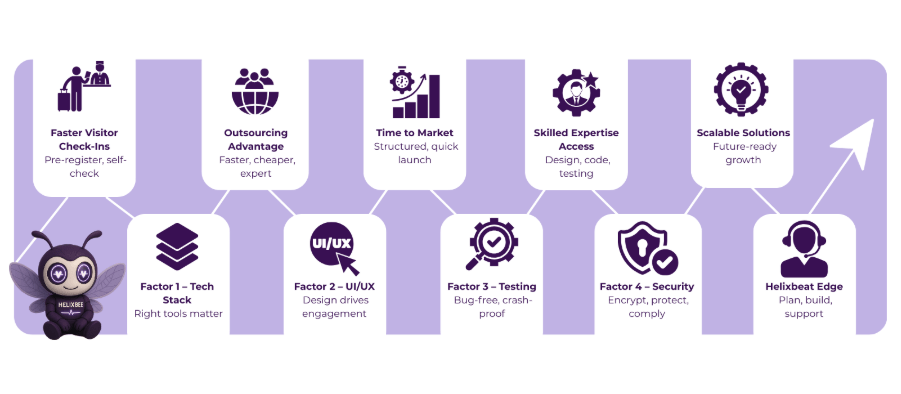

Software testing services are useful when you need to scale quickly, test complex systems, or launch under tight deadlines. Here’s when it makes the most sense to partner with a professional testing team:

1. When Your Internal QA Bandwidth Is Limited: If your testers are stretched thin or juggling multiple projects, quality can slip. External testing partners can take on regression, performance, or UAT testing without adding pressure to your internal team.

2. When You’re Launching a High-Stakes Release: Whether it’s a new product, a major feature, or a public launch, expert QA teams can run deep validation cycles, simulate edge cases, and reduce your risk of failure in production.

3. When You Need Specialized Testing Skills: Areas like security testing, performance testing, or API testing require advanced tools and experienced testers. A testing service brings these skills on-demand.

4. When You’re Scaling Quickly and Need Test Automation: As your product grows, manual testing alone isn’t enough. Testing services help you build and manage automation frameworks, integrate with CI/CD pipelines, and keep your releases efficient.

Choosing the right software testing services is a strategic move that helps you launch faster, smarter, and with more confidence.

Launch Your Product Successfully with Helixbeat

At Helixbeat, we help tech teams and CTOs launch better products with the support of expert software testing services. We know that building a product is only half the job; the other half is making sure it works perfectly when your users start using it.

Our team supports you with everything from a full QA readiness assessment to finding bugs, testing speed and performance, and setting up automation. Whether you’re about to launch something new or just want extra testing help, we fit right into your team and work with your tools.

With Helixbeat, you get the right guidance, a clear quality checklist, and strong testing support so you can launch your product confidently without worrying about last-minute problems.

FAQ:

1. What are software testing services?

Software testing services involve evaluating software to ensure it functions properly, meets requirements, and is secure. These services include bug detection, performance testing, and security validation.

2. What are the types of software testing?

The main types of software testing are Functional Testing (verifies software features work as expected), Performance Testing (checks speed and scalability), Security Testing (identifies vulnerabilities), Usability Testing (ensures user-friendliness), Regression Testing (checks if new changes affect existing features), and User Acceptance Testing (UAT) (validates user needs).

3. What is QA strategy planning?

QA strategy planning is the process of outlining how software testing will be conducted, including defining objectives, tools, and team responsibilities to ensure thorough and effective testing.

4. Why is a testing checklist important for a CTO?

A testing checklist helps a CTO ensure every critical aspect of the product, such as functionality, security, and performance, is thoroughly tested before launch, reducing the risk of post-release issues.

5. What is a CTO testing guide, and why is it important before launching a product?

A CTO testing guide is a roadmap that outlines the necessary testing steps to ensure software is fully validated before launch. It helps identify risks, maintain quality, and ensure the product meets all requirements before going live.